The Rise of AI-Driven Search Engines

In recent years, we have witnessed a rapid evolution in the ways we access information online, thanks in large part to the advent of AI-driven search engines. Technologies such as OpenAI's ChatGPT have sparked excitement and concern worldwide. As these sophisticated models become more integrated into our daily lives, they raise important questions about the accuracy and ethics of the results they produce. Among the primary concerns is their potential to perpetuate scientific racism and misinformation, issues that society is increasingly struggling to combat.

The Power and Perils of AI-Driven Searches

The allure of AI in search is undeniable. Companies like Google and Microsoft, along with ambitious startups such as Perplexity AI and You.com, are racing to build and perfect AI-driven search capabilities, envisioning a future where intelligent algorithms guide users effortlessly to the information they seek. However, this dream is not without its pitfalls. AI models, while advanced, can suffer from hallucinations, a term used in artificial intelligence for instances where a model produces false or misleading information. These hallucinations can be particularly insidious because they are presented with the same fluency and confidence as accurate answers, potentially sowing seeds of misinformation.

One of the most vocal critics of this technological trend is Gary Marcus, a respected professor emeritus at New York University. Marcus argues that while AI search engines seem smart, they lack a genuine understanding of the text they generate. This inherent limitation means that the information they provide can be as much a product of the model's internal biases as it is of reality. In a digital age saturated with information, the last thing society needs is for unreliable AI content to flood the internet, muddying the waters of truth and further complicating the discernment of fact from fiction.

Shortcomings in Real-Time Search Capabilities

Traditional search engines and their AI-driven counterparts differ in how they handle certain types of queries. Platforms like Google excel at delivering real-time information, such as the latest sports scores or weather updates, a task that AI-driven engines currently struggle with. This shortfall points to a deeper issue: these models are not inherently designed to stay current with the latest data streams. Constantly changing information is a challenge for an AI model, which relies heavily on the datasets it was trained on. Without continuous updates and fine-tuning, the information it provides risks becoming outdated or incorrect.
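One way to picture the gap described above is a simple router that sends time-sensitive queries to a live data source instead of a model limited to its training data. The sketch below is only an illustration under stated assumptions: the keyword list and the backend names `live_index` and `language_model` are hypothetical, and real systems would need far more robust ways to detect freshness needs.

```python
# Hypothetical keyword list; a real system would not rely on keywords alone.
TIME_SENSITIVE_TERMS = {"score", "scores", "weather", "today", "latest", "now"}


def needs_live_data(query: str) -> bool:
    """Return True if the query contains a term that suggests real-time data."""
    words = {w.strip("?.,!").lower() for w in query.split()}
    return bool(words & TIME_SENSITIVE_TERMS)


def route(query: str) -> str:
    """Pick a (hypothetical) backend name for the query."""
    # Fresh facts go to a live index; everything else goes to the model,
    # which can only draw on the data it was trained on.
    return "live_index" if needs_live_data(query) else "language_model"
```

Under these assumptions, a question about today's weather would be routed to the live source, while a question about who wrote a classic play would go to the model.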

Scientific Racism and Ethical Concerns

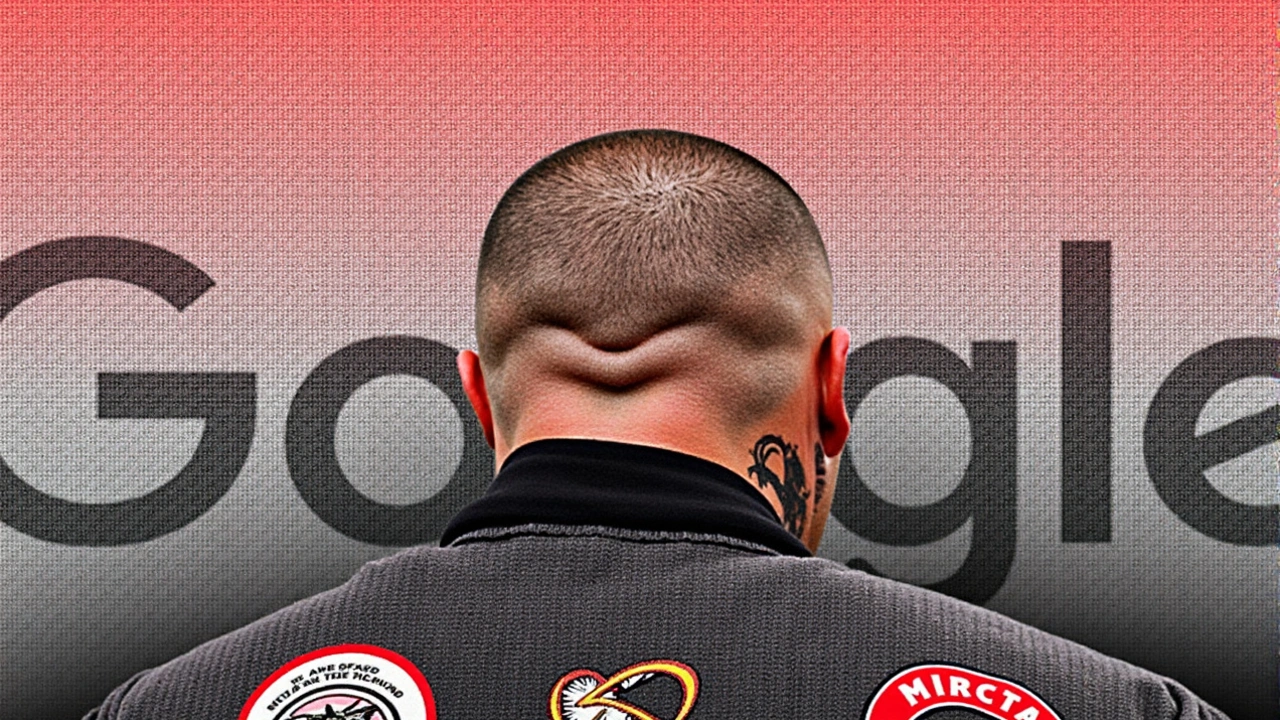

Arguably, one of the gravest concerns is the perpetuation of scientific racism through AI search engines. The biases embedded in AI systems can reflect and reinforce prejudiced views present in the data they were trained on. Scientific racism, the pseudoscientific belief that empirical evidence can justify racial hierarchies, can find new life when inadvertently promoted by seemingly authoritative AI sources.

This highlights the ethical responsibility of those developing AI technologies. To mitigate these risks, organizations must diligently audit and refine their AI models to identify and eliminate biases. This endeavor requires thoughtful consideration and active engagement from a diverse group of stakeholders, including ethicists, policymakers, and representatives from marginalized communities.

The Promise of AI in Navigational and Buried Information Queries

Despite its pitfalls, AI-driven search technology shows promise in areas where traditional search engines face challenges. One such area is handling "Buried Information Queries"—searches for data obscured by layers of ads and SEO-heavy content. Here, AI's ability to parse vast amounts of information quickly can pinpoint relevant data that might otherwise remain unseen. Users benefit from a streamlined experience that delivers the information directly and efficiently, free from the clutter that often burdens conventional search results.

However, the development and refinement of these search engines must prioritize values such as accuracy, reliability, and ethics. This is essential if AI technologies are to serve as trusted public tools rather than hindrances. Striking a balance between technical advancement and ethical responsibility will require ongoing collaboration and transparency among those involved in the technology's progress.

Moving Forward: Recommendations and Solutions

To ensure AI-driven search engines fulfill their potential as trusted sources of information, several measures must be adopted. First, tech companies must commit to maintaining and updating their datasets to reflect the most current and reliable information. Continuous learning algorithms, which enable AI models to evolve based on new data, could help maintain the relevance and accuracy of search results.

An ethical framework governing AI development is equally vital. By setting stringent ethical standards and incorporating diverse perspectives into the development process, it is possible to minimize biases and enhance the objectivity of AI-generated content. Policymakers and regulators will play a pivotal role in shaping these standards and ensuring that tech companies adhere to them. Public awareness and education must also be prioritized, empowering users to critically evaluate the information they receive from AI-driven searches.

Conclusion: The Future of AI-Driven Search

AI-driven search engines represent a thrilling yet challenging frontier in technology. While they promise to transform how we access and interact with information, it is crucial to address their inherent flaws and ethical implications. By committing to accuracy, reliability, and ethical principles, we can harness the power of AI to create more informed, aware, and connected societies. As we move forward, the conversation must remain inclusive and dynamic, ensuring that the technological progress we pursue is as equitable and responsible as it is innovative.

Roland Baber

It's encouraging to see the conversation turning toward concrete steps for auditing AI models. By routinely checking for biased patterns and updating the training data, we can keep the systems aligned with ethical standards. The collaborative effort between developers, ethicists, and the broader community is essential for building trust. Keeping the dialogue open helps us all stay accountable and ensures that the technology serves everyone fairly.